This is the final project for the Robotics & Automation course at VinUniversity. The project aims to design a robot hand that is controlled by tracking a human hand. The team took two approaches: one uses a laptop's webcam, and the other uses the hand-tracking feature of the Meta Quest 3.

Support with Robot Assembly.

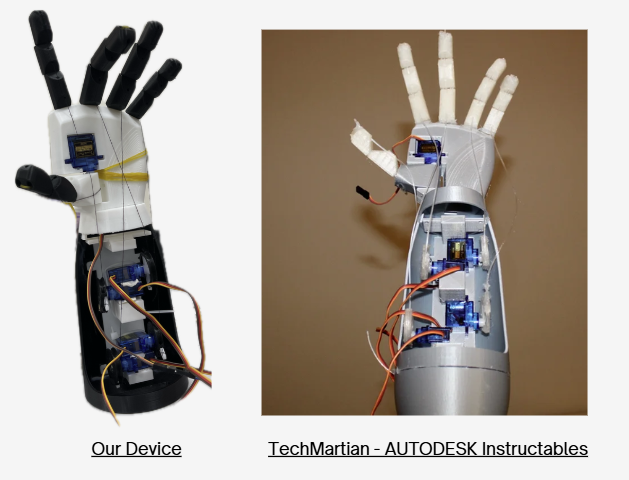

Because of limited time, our team built the robot hand from an open-source design so that we could focus on developing the tracking feature. We chose the simple design from TechMartian [1]. We printed the necessary parts from the available files, then assembled the robot hand using rubber bands, strings, and superglue.

The robot hand uses five SG90 servo motors to control the fingers. The servos are driven by an Arduino Uno.

The servos are connected to the fingertips via strings. When a servo pulls, the string pulls the fingertip toward the palm, making the finger curl.

Rubber bands are installed inside the fingers so that, when the servos release the strings, the rubber bands pull the fingers back to their initial positions.
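Since each finger is driven by one servo pulling a string, a tracked curl angle has to be turned into a servo angle at some point. The sketch below shows one simple way to do that with a clamped linear mapping. The function name `curl_to_servo`, the 0 to 90 degree curl range, and the 0 to 180 degree servo range are assumptions for illustration, not values taken from the project.

```python
def curl_to_servo(curl_deg, curl_max=90.0, servo_min=0, servo_max=180):
    """Map a finger curl angle (degrees) to a servo angle (degrees).

    All ranges here are assumed defaults; a real hand would need
    per-finger calibration of string travel.
    """
    # Normalise the curl to 0..1 and clamp values outside the range.
    t = max(0.0, min(1.0, curl_deg / curl_max))
    # Interpolate linearly between the servo end stops.
    return round(servo_min + t * (servo_max - servo_min))
```

Clamping keeps a noisy tracking value from driving a servo past its end stop, which matters for a string-and-rubber-band mechanism.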

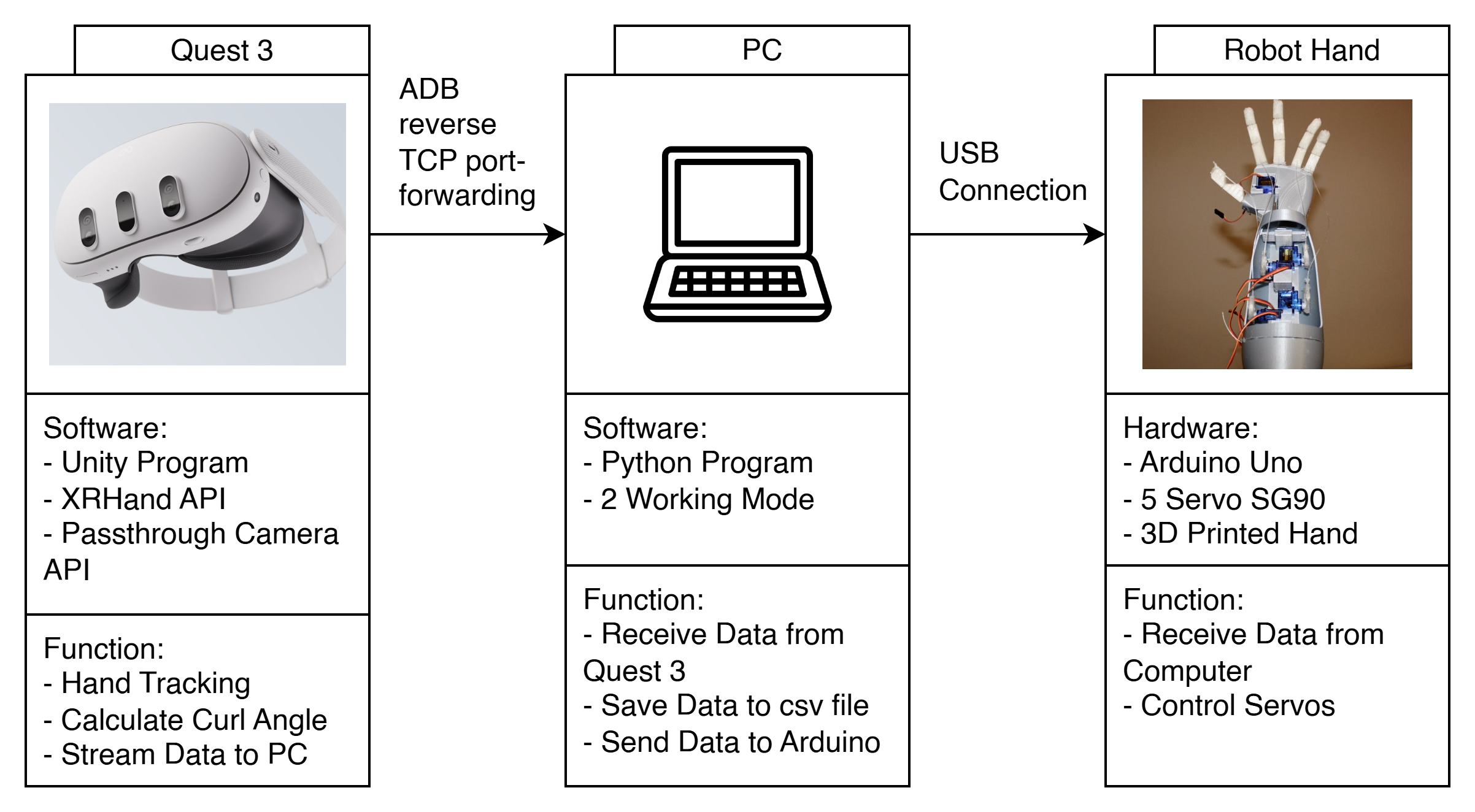

On the Quest 3, we built an application with Unity 3D (version 6000.1.5f1) that accesses the hand-tracking data and streams it to the computer via ADB reverse TCP port forwarding.
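With `adb reverse tcp:<port> tcp:<port>`, a connection the headset opens to its own localhost port is forwarded to the same port on the computer, so the computer side only needs an ordinary TCP listener. The following is a minimal sketch of such a listener. The port number 5005 and the wire format (one line per frame with five comma-separated curl angles) are assumptions, since the original does not specify them.

```python
import socket


def parse_frame(line):
    """Parse one 'thumb,index,middle,ring,pinky' line into five floats.

    The comma-separated format is an assumed wire format.
    """
    values = [float(v) for v in line.strip().split(",")]
    if len(values) != 5:
        raise ValueError(f"expected 5 values, got {len(values)}")
    return values


def read_frames(conn):
    """Yield parsed frames from a connected socket until the peer closes."""
    with conn.makefile("r", encoding="utf-8", newline="\n") as stream:
        for line in stream:
            if line.strip():  # skip blank lines
                yield parse_frame(line)


def serve(port=5005):
    """Accept one connection on localhost and yield its frames.

    Assumes `adb reverse tcp:5005 tcp:5005` was run beforehand; the
    port number is hypothetical.
    """
    with socket.create_server(("127.0.0.1", port)) as server:
        conn, _addr = server.accept()
        with conn:
            yield from read_frames(conn)
```

A caller would iterate with `for angles in serve(): ...` and pass each frame on to whatever drives the hand.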

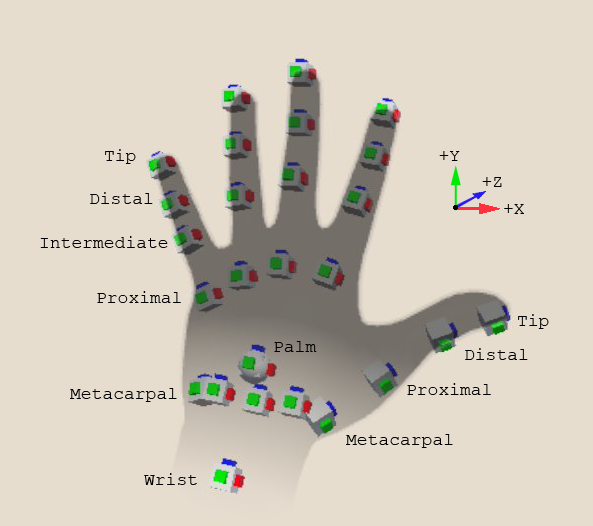

In this part, we use the XR Hands API [2] and the Gesture Example that ships with it. The XR Hands API gives us access to the position and rotation data of the finger joints.

From this, we calculate the curl angle of the fingers using the data of the intermediate joints, the proximal joints, and the metacarpal joints.
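One common way to get a curl angle from three joints is to measure the angle between the two bone directions they define. The sketch below does this from joint positions only; it is an illustration in Python rather than the project's Unity code, and the function name `curl_angle_deg` and the use of positions alone (without joint rotations) are assumptions.

```python
import math


def curl_angle_deg(metacarpal, proximal, intermediate):
    """Angle in degrees between the metacarpal->proximal bone and the
    proximal->intermediate bone. 0 means the finger segment is straight.

    Each argument is an (x, y, z) joint position.
    """
    a = [p - m for p, m in zip(proximal, metacarpal)]
    b = [i - p for i, p in zip(intermediate, proximal)]
    norm = math.hypot(*a) * math.hypot(*b)
    if norm == 0:
        return 0.0  # degenerate input: two joints coincide
    dot = sum(x * y for x, y in zip(a, b))
    # Clamp to guard against rounding just outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))
```

For example, three collinear joints give an angle of 0, and a finger bent at a right angle at the proximal joint gives 90.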

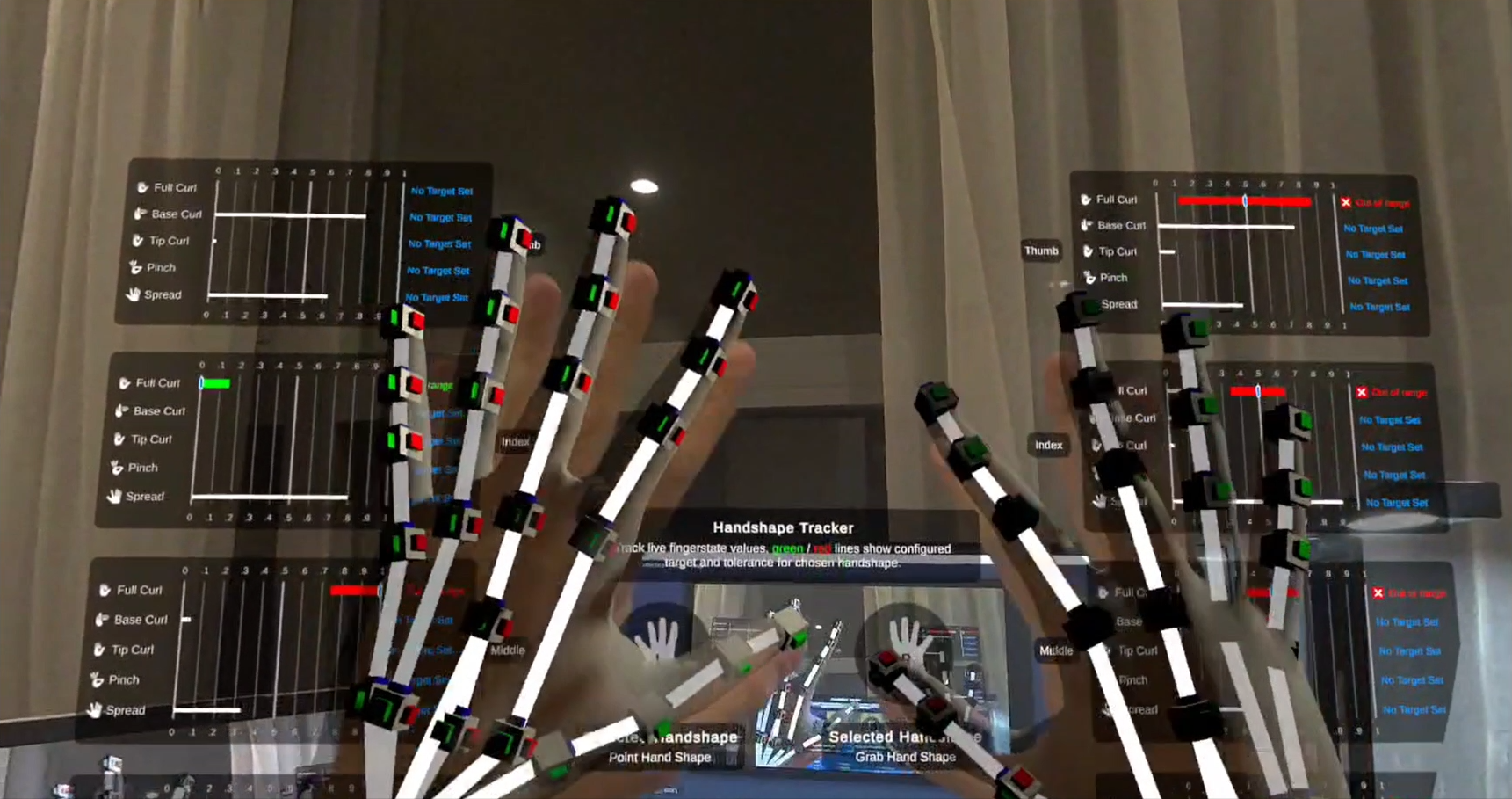

We use the Gesture Example from the XR Hands API for the UI overlay on our fingers. This overlay helps us check that hand tracking is working properly, and it also looks cool.

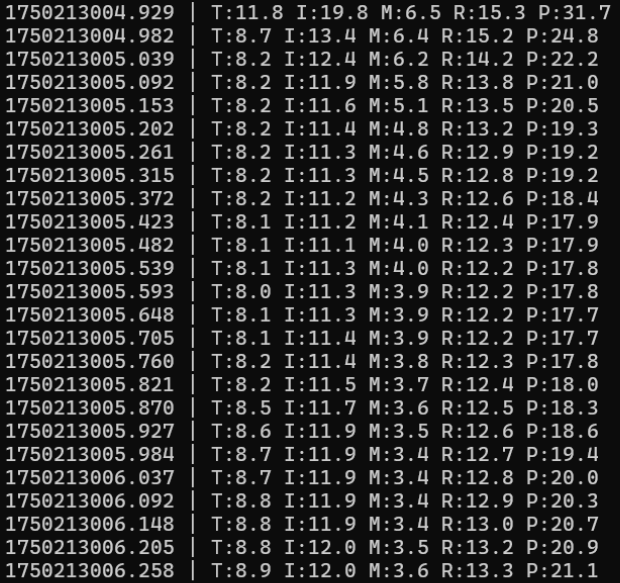

The curl angles of the fingers are sent to the computer via a serial connection, and a Python program reads these values.

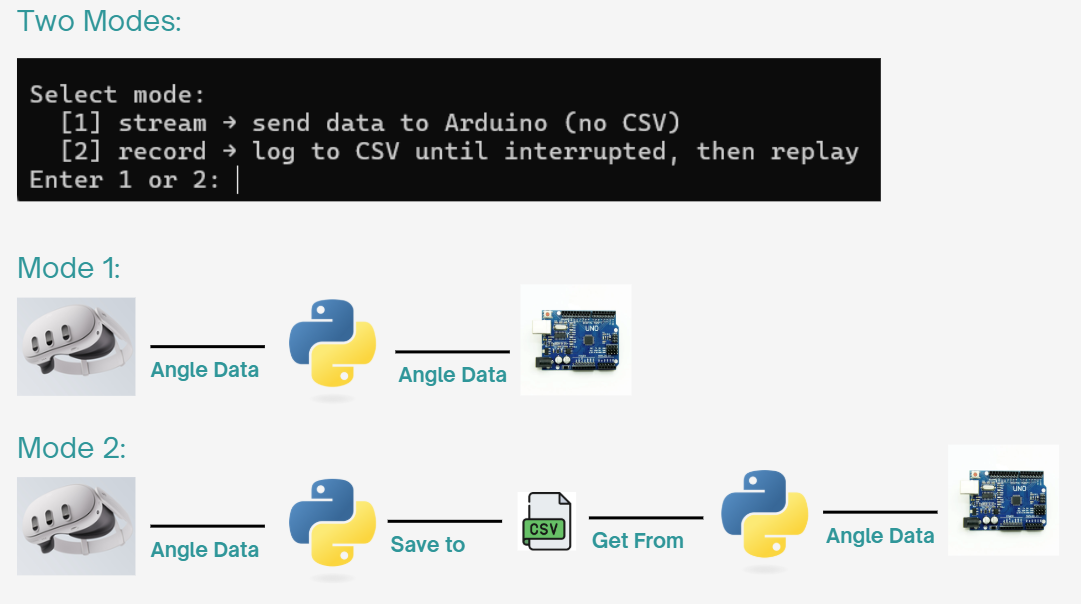

The Python program has two modes: streaming data in real time, or recording data to a CSV file and then replaying it to the hand.

In Mode 1 - Real-time Streaming, the Python program immediately echoes the received data to the Uno, causing the robot to mimic the human hand in real time.
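The real-time mode amounts to a loop that writes each received frame straight to the Uno. Below is a minimal sketch of that loop. The command format (five comma-separated integers followed by a newline) and the names `format_command` and `stream_realtime` are assumptions; the `port` argument is any object with a `write(bytes)` method, so an in-memory buffer stands in for the serial port here.

```python
import io


def format_command(angles):
    """Encode five angles as one ASCII line, e.g. b'0,90,180,45,10\\n'.

    The line format is an assumed protocol, not the project's actual one.
    """
    return (",".join(str(round(a)) for a in angles) + "\n").encode("ascii")


def stream_realtime(frames, port):
    """Forward each frame to the port as soon as it arrives.

    Returns the number of frames forwarded.
    """
    count = 0
    for angles in frames:
        port.write(format_command(angles))
        count += 1
    return count


# Demo with an in-memory buffer standing in for the serial port.
demo_port = io.BytesIO()
stream_realtime([[0, 90, 180, 45, 10]], demo_port)
print(demo_port.getvalue())  # b'0,90,180,45,10\n'
```

With the pyserial package, an open `serial.Serial` object could be passed as `port`, since it also exposes `write(bytes)`; the port name and baud rate would depend on the setup.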

In Mode 2 - Pre-recorded Streaming, the data is written to a CSV file. When the user stops recording, the data is streamed to the Uno. The program can replay the data to the Uno a defined number of times, from a single pass to an infinite loop.
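The record-then-replay mode can be sketched with the standard `csv` module. This is an illustration under assumptions: the names `record` and `replay`, the one-row-per-frame CSV layout, and the convention that `loops=None` means "repeat forever" are all hypothetical. The `send` argument is any callable that delivers one frame to the hand.

```python
import csv
import itertools


def record(frames, path):
    """Write each frame of five angles as one CSV row."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        for angles in frames:
            writer.writerow(angles)


def replay(path, send, loops=1):
    """Send every recorded frame to `send`, repeating `loops` times.

    loops=None replays forever (assumed convention).
    """
    with open(path, newline="") as f:
        rows = [[int(v) for v in row] for row in csv.reader(f) if row]
    passes = itertools.count() if loops is None else range(loops)
    for _ in passes:
        for angles in rows:
            send(angles)
```

This sketch stores no timestamps, so a replay runs as fast as `send` allows; a real recording would likely also need per-frame timing to reproduce the original motion speed.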

This short project gave me hands-on experience with teleoperation using VR technology on the Quest 3. In a short period, I was able to familiarize myself with VR development tools and APIs. It also deepened my knowledge of Python, human-robot interaction, and the connections and communication between the components of an engineering system.

[2] Unity Technologies. (2025). Hand data model (Version 1.5.0) [Unity XR Hands documentation]. From https://docs.unity3d.com/Packages/com.unity.xr.hands@1.5/manual/hand-data/xr-hand-data-model.html